AI offers immense potential for innovation and transformation. However, its increasing ubiquity also raises ethical concerns that cannot be ignored. The responsible development and use of AI are crucial for ensuring this technology benefits society without causing unintended harm.

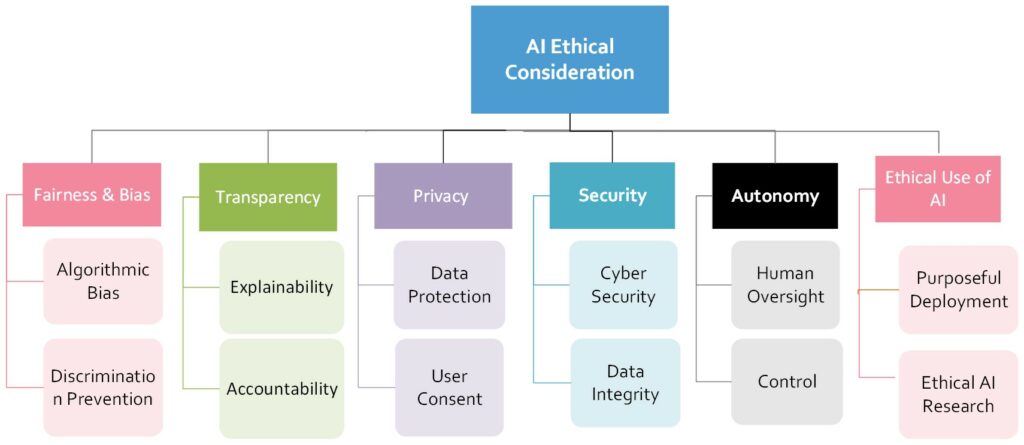

Let’s explore key ethical considerations surrounding AI tools:

- Bias and Discrimination

AI algorithms are only as unbiased as the data they're trained on. If that data reflects societal biases, the resulting systems can perpetuate them, potentially leading to discriminatory outcomes in areas such as hiring, lending, and even healthcare access. It's vital to prioritize bias detection and mitigation at every stage of AI development, from data collection to deployment.

- Transparency and Explainability

Many AI systems function as "black boxes": it's difficult to understand how they arrive at their decisions, which is problematic in high-stakes situations. Making AI systems more explainable helps ensure accountability, fosters trust, and reduces the risk of unintended consequences.

- Privacy and Data Security

AI is often data-hungry, requiring large volumes of personal information. This raises concerns about privacy, especially in the age of frequent data breaches. Robust data protection, user consent, and anonymization practices are crucial for upholding individual privacy rights.

- Job Displacement and Economic Impacts

As AI automates tasks, it has the potential to displace jobs. Careful consideration must be given to the economic repercussions of AI adoption. Strategies like retraining programs, workforce planning, and exploring new economic models might be needed to address this challenge fairly.

- Accountability and Responsibility

Who is liable if an AI system makes a harmful decision? Determining clear lines of accountability for both the developers and users of AI technology is critical. This may involve both legal frameworks and industry standards.

Building an Ethical AI Future

- Diversity in Development Teams: Having diverse perspectives in the teams building AI systems helps minimize blind spots and better address potential biases.

- Proactive Ethical Frameworks: Companies and organizations need to adopt ethical AI principles that put fairness, transparency, and accountability at the forefront.

- Regulation and Governance: A balance is needed between innovation and regulation. Thoughtful regulations can set clear ethical boundaries for responsible AI usage.

- Ongoing Scrutiny and Evaluation: AI systems shouldn’t be seen as static. Constant monitoring and evaluation are needed to ensure they remain fair, safe, and beneficial to society as they evolve.

The Future of Ethical AI

Ethical AI is not merely a nice-to-have; it's an imperative. Addressing these challenges requires collaboration among researchers, developers, policymakers, and the public. By prioritizing responsible and ethical AI development, we can unlock the technology's potential while ensuring it serves humanity in a just and equitable way.
